The products used in the Cisco Data Center Architecture

Today's blog post will be about "the products used in the Cisco Data Center Architecture".

In my previous blog post I explained the Cisco Data Center Architecture. This architecture is built from different components/hardware, and every piece of hardware is there for a reason. Because the equipment list of the CCIE Data Center track is already known, it's very easy to determine the products used in the Cisco Data Center.

The products used can be found below, and I also created a hyperlink to the datasheet on the Cisco website.

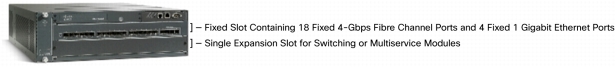

The Cisco MDS 9222i Multiservice Modular Switch is a platform for deploying high-performance SAN extension solutions with multi-protocol connectivity possibilities.

This device offers 18 x 4 Gbps Fibre Channel ports and 4 x 1 Gigabit Ethernet ports, all built into a fixed slot. There is also room for a single expansion slot for Switching or Multiservice Modules.

The MDS consumes 3U of rack space.

The Nexus 7000 series are modular switches designed for mission-critical data center usage. The interface speeds that can be offered scale up to 10 Gbps (as of this writing).

The Nexus 7000 platform has 3 different chassis: the 7010 (with room for 10 modules), the 7018 (with room for 18 modules) and the 7009 (with room for 9 modules). In the CCIE Data Center lab the Nexus 7009 will be deployed.

This blade is used to provide 10 Gigabit Ethernet connectivity. This line card has 32 ports available and will be placed in the Nexus 7009.

This blade is used to provide 10 Gigabit Ethernet and/or 1 Gigabit Ethernet connectivity. This line card has 32 ports available and will be placed in the Nexus 7009.

Cisco Nexus 5548P Switch

The Nexus 5000 series are typically used as "server access" switches. The 5548P has 32 fixed 1 and/or 10 Gigabit Ethernet ports. There is room to support 1 expansion module.

Cisco Nexus 5548UP Switch

The 5548UP is the same as the 5548P; the only difference is that its ports are unified ports. Besides 1 and/or 10 Gigabit Ethernet, each port can also be configured as a native Fibre Channel port.

Cisco Nexus 2232PP

The Nexus 2000 series are used as Fabric Extenders together with other Nexus switches. They are connected to, for example, the Nexus 5000 series to provide extra port connectivity in various racks.

The Nexus 2232PP has 32 x 1 and/or 10 Gigabit Ethernet and Fibre Channel over Ethernet (FCoE) host interfaces (SFP+) and 8 x 10 Gigabit Ethernet and FCoE fabric interfaces (SFP+).
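To get a feel for what those port counts mean, here is a small sketch that calculates the oversubscription ratio between the host side and the fabric side of a Fabric Extender. It assumes that every host port runs at 10 Gbps and that all eight fabric uplinks are in use; the function name is just something I made up for this example.

```python
from fractions import Fraction


def oversubscription(host_ports: int, host_gbps: int,
                     fabric_ports: int, fabric_gbps: int) -> Fraction:
    """Ratio of total host-facing bandwidth to total fabric (uplink) bandwidth."""
    return Fraction(host_ports * host_gbps, fabric_ports * fabric_gbps)


# Port counts for the Nexus 2232PP as listed above.
# Assumption: all ports run at 10 Gbps and all uplinks are connected.
ratio = oversubscription(host_ports=32, host_gbps=10,
                         fabric_ports=8, fabric_gbps=10)
print(f"{ratio.numerator}:{ratio.denominator}")  # prints "4:1"
```

With fewer uplinks connected the ratio goes up, so the number of fabric interfaces you actually cable is a design decision.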

Cisco Nexus 2232TM

The Nexus 2232TM has 32 x 1 and/or 10GBASE-T host interfaces and modular uplinks (8 x 10 Gigabit Ethernet fabric interfaces, SFP+).

SFP+ is an enhanced version of the SFP, in that it supports data rates up to 10 Gbit/s and supports 8 Gbit/s Fibre Channel, 10 Gigabit Ethernet and Optical Transport Network standard OTU2.

The Nexus 1000V is a "virtual switch" that works together with the VMware software suite. The Nexus 1000V consists of 2 components: the VEM, which stands for Virtual Ethernet Module (this is where the virtual servers within the vSphere environment will be uplinked to), and the VSM, which stands for Virtual Supervisor Module (this is the supervisor module that can control one or multiple VEMs).
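The relationship between the two components can be sketched as a small data model: one VSM that controls several VEMs, with the virtual machines attached to the VEMs. This is only an illustration of the structure, not how the Nexus 1000V is actually implemented or configured; all class, method and host names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class VEM:
    """Virtual Ethernet Module: the per-host component the VMs connect to."""
    host: str  # hypothetical hypervisor host name
    vm_ports: list[str] = field(default_factory=list)

    def attach_vm(self, vm_name: str) -> None:
        self.vm_ports.append(vm_name)


@dataclass
class VSM:
    """Virtual Supervisor Module: controls one or multiple VEMs."""
    name: str
    vems: dict[str, VEM] = field(default_factory=dict)

    def add_vem(self, vem: VEM) -> None:
        self.vems[vem.host] = vem

    def all_vms(self) -> list[str]:
        """Every VM reachable through the VEMs this VSM controls."""
        return [vm for vem in self.vems.values() for vm in vem.vm_ports]


vsm = VSM("vsm-1")
for host in ("esx-01", "esx-02"):
    vsm.add_vem(VEM(host))
vsm.vems["esx-01"].attach_vm("web-01")
vsm.vems["esx-02"].attach_vm("db-01")
print(vsm.all_vms())  # ['web-01', 'db-01']
```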

UCS C200 series

There are several UCS models available in the C2xx range. I think, however, that the CCIE Data Center lab will only have servers starting from the UCS C220 M3 and above because of the use of the Virtual Interface Cards. The UCS C200 M2 and UCS C210 M2 do not support these cards.

UCS C200 M2 (with support for two Intel Xeon 5500 or 5600 multicore processors, up to 192 GB of DDR3 RAM and 8 x 2.5-inch or 4 x 3.5-inch hard disks)

UCS C210 M2 (same as the C200 M2 but with support for more hard disks)

[UCS C220 M3](http://www.cisco.com/en/US/prod/collateral/ps10265/ps10493/ps12369/data_sheet_c78-700626.html) (more powerful than the C200 M2, with support for two Intel Xeon E5-2600 multicore processors, up to 256 GB of DDR3 RAM, 8 x 2.5-inch hard disks and Cisco UCS P81E Virtual Interface Cards (VIC))

UCS C240 M3 (same as the C220 M3 but with support for up to 384 GB of DDR3 RAM and 24 x 2.5-inch hard disks)

UCS C250 M2 (same as the C200 M2 but with support for up to 384 GB of DDR3 RAM, 8 x 2.5-inch hard disks and Cisco UCS P81E Virtual Interface Cards (VIC))

UCS C260 M2 (same as the C250 M2 but with support for up to 1 TB of DDR3 RAM and 16 x 2.5-inch hard disks)

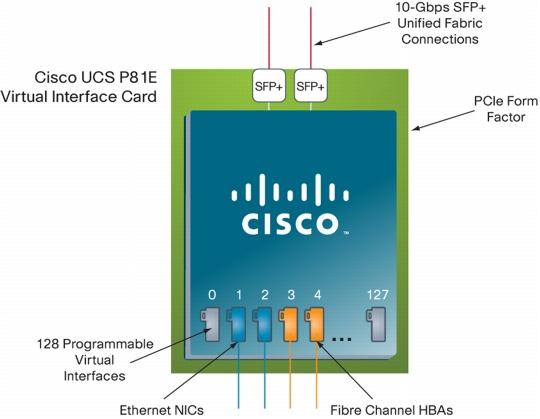

The Cisco UCS P81E Virtual Interface Card is a virtualization-optimized Fibre Channel over Ethernet (FCoE) PCI Express (PCIe) 2.0 x8 10-Gbps adapter designed for use with Cisco UCS C-Series Rack-Mount Servers. The virtual interface card is a dual-port 10 Gigabit Ethernet PCIe adapter that can support up to 128 PCIe standards-compliant virtual interfaces, which can be dynamically configured so that both their interface type (network interface card [NIC] or host bus adapter [HBA]) and identity (MAC address and worldwide name [WWN]) are established using just-in-time provisioning. In addition, the Cisco UCS P81E can support network interface virtualization and Cisco VM-FEX technology.
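The idea of virtual interfaces that get their type and identity at provisioning time can be sketched like this. It is only a toy model of the concept described above: the class names and the example addresses are made up, and the only number taken from the datasheet text is the limit of 128 virtual interfaces.

```python
from dataclasses import dataclass, field
from enum import Enum

MAX_VIRTUAL_INTERFACES = 128  # limit quoted above for the P81E


class Kind(Enum):
    NIC = "nic"
    HBA = "hba"


@dataclass(frozen=True)
class VirtualInterface:
    kind: Kind
    identity: str  # MAC address for a NIC, WWN for an HBA


@dataclass
class VirtualInterfaceCard:
    interfaces: list[VirtualInterface] = field(default_factory=list)

    def provision(self, kind: Kind, identity: str) -> VirtualInterface:
        """Create a virtual interface; type and identity are set only now."""
        if len(self.interfaces) >= MAX_VIRTUAL_INTERFACES:
            raise ValueError("card already has 128 virtual interfaces")
        vif = VirtualInterface(kind, identity)
        self.interfaces.append(vif)
        return vif


vic = VirtualInterfaceCard()
vic.provision(Kind.NIC, "00:25:b5:00:00:01")        # example MAC address
vic.provision(Kind.HBA, "20:00:00:25:b5:00:00:01")  # example WWN
print(len(vic.interfaces))  # 2
```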

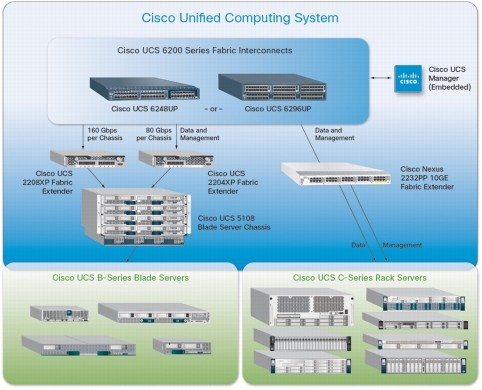

The UCS 6248UP 48-Port Fabric Interconnect is a 48-port switch with 10 Gigabit Ethernet ports that supports FCoE and Fibre Channel. The switch has 32 fixed 1 and/or 10-Gbps Ethernet, FCoE and FC ports and one expansion slot. The UCS servers uplink to these switches.

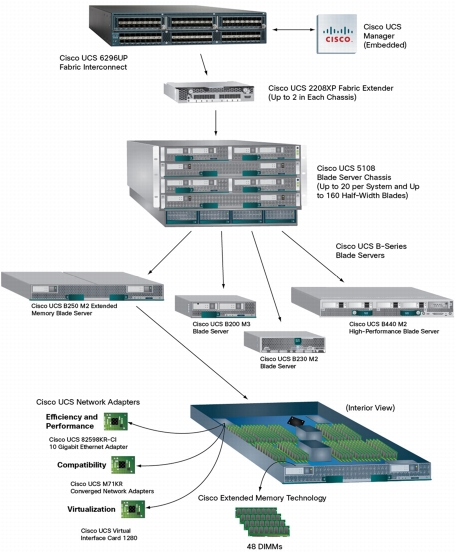

The Cisco UCS 5108 Blade Server Chassis is six rack units (6RU) high and can mount in an industry-standard 19-inch rack. A chassis can house up to eight half-width Cisco UCS B-Series Blade Servers and can accommodate both half- and full-width blade form factors.

The UCS B200 M2 are the half-width blades (with support for two Intel Xeon 5600 multicore processors, up to 192 GB of DDR3 RAM and 2 x 2.5-inch hard disks)

The UCS B250 M2 are the full-width blades (with support for two Intel Xeon 5600 multicore processors, up to 384 GB of DDR3 RAM and 2 x 2.5-inch hard disks)
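A quick way to check whether a mix of these blades fits into one UCS 5108 chassis is to count half-width slots. The sketch below assumes that a full-width blade takes the space of two half-width blades, and it only counts slots; it ignores where in the chassis a full-width blade can physically be placed. The function names are my own.

```python
HALF_WIDTH_SLOTS = 8  # UCS 5108: up to eight half-width blades

# Half-width slots consumed per blade model.
# Assumption: a full-width blade (B250 M2) occupies two half-width slots.
SLOT_WIDTH = {"B200 M2": 1, "B250 M2": 2}


def slots_used(blades: dict[str, int]) -> int:
    """Total half-width slots needed for a {model: count} mix of blades."""
    return sum(SLOT_WIDTH[model] * count for model, count in blades.items())


def fits_in_chassis(blades: dict[str, int]) -> bool:
    return slots_used(blades) <= HALF_WIDTH_SLOTS


print(fits_in_chassis({"B200 M2": 4, "B250 M2": 2}))  # True  (4 + 4 = 8 slots)
print(fits_in_chassis({"B200 M2": 2, "B250 M2": 4}))  # False (2 + 8 = 10 slots)
```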

--> Palo mezzanine card (UCS M81KR)

The Cisco UCS M81KR Virtual Interface Card (VIC) is a virtualization-optimized Fibre Channel over Ethernet (FCoE) mezzanine card designed for use with Cisco UCS B-Series Blade Servers. The VIC is a dual-port 10 Gigabit Ethernet mezzanine card that supports up to 128 Peripheral Component Interconnect Express (PCIe) standards-compliant virtual interfaces that can be dynamically configured so that both their interface type (network interface card [NIC] or host bus adapter [HBA]) and identity (MAC address and worldwide name [WWN]) are established using just-in-time provisioning. In addition, the Cisco UCS M81KR supports Cisco VN-Link technology, which adds server-virtualization intelligence to the network. (So basically the same card as the UCS P81E used for the UCS C200 models, but now for the UCS B models.)

Same as the Palo mezzanine card, only from a different brand.

With all the UCS servers and the 6200s, this is a nice picture to see how it all fits together:

The Cisco ACE 4710 offers a broad set of intelligent Layer 4 load-balancing and Layer 7 content-switching technologies that work with IPv4 and IPv6 traffic and are integrated with the latest virtualization and security capabilities. It supports translation between IPv4 and IPv6 traffic, supporting migration to IPv6 and allowing deployments in mixed networks.

Dual attached JBODs

JBODs (Just A Bunch Of Disks) can literally be anything with uplinks to the network and disks.

I hope this helped with understanding the second topic of the CCIE Data Center blueprint. My next blog topic will be “Describe Cisco unified I/O solution in access layer”.

If you like my blog posts, please let me know below (in a comment) or through Twitter!